© Felix Noak

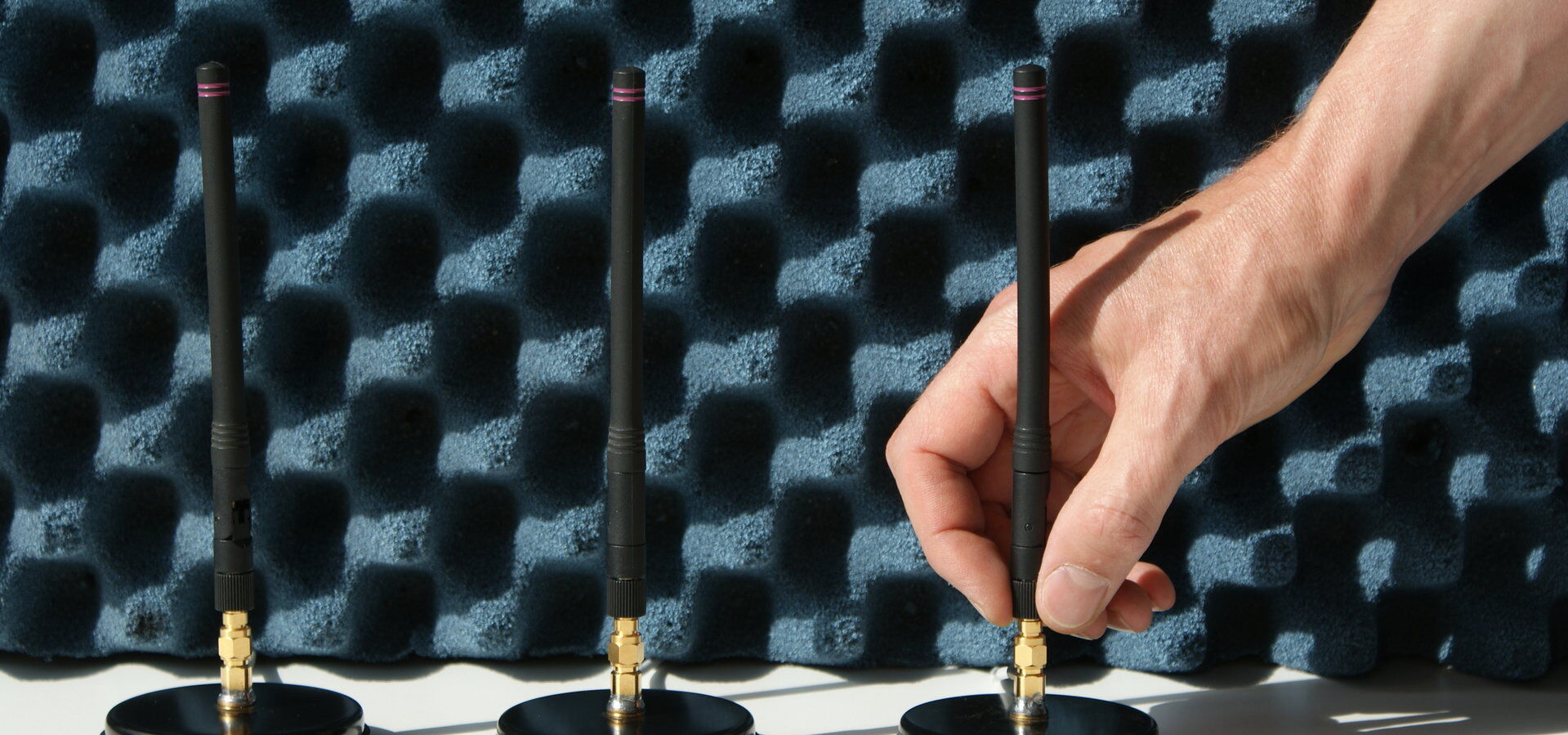

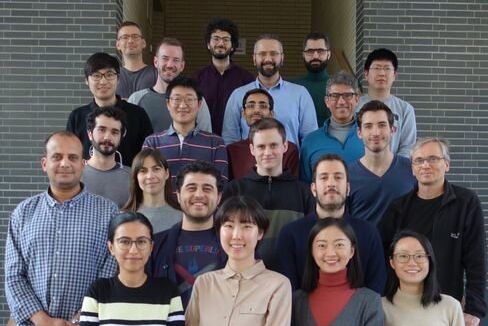

The department Theoretische Grundlagen der Kommunikationstechnik (Theoretical Foundations of Communication Technology, CommIT) has a long history at TU Berlin. In April 2014, Prof. Giuseppe Caire received an Alexander von Humboldt Professorship and has headed the department since then. CommIT focuses on both fundamental and applied research in communication theory, information theory, signal processing, and networks, with a particular emphasis on wireless communication systems.

Bachelor's and Master's students (B.Sc./M.Sc.) who wish to specialize in these areas are welcome to contact our staff for further information. Possible Master's thesis (M.Sc.) projects can be found here. If you are interested in working in our department, you can find current job openings here.

For more information on the research areas of the Theoretische Grundlagen der Kommunikationstechnik (CommIT) department, please select Research in the menu on the left, or visit the websites of the IEEE Information Theory Society, the IEEE Communications Society, and the IEEE Signal Processing Society.

News

Joint TUB and Huawei Workshop on Communication Technology

On May 6, 2024, CommIT will host a Huawei-sponsored workshop on communication technology titled "Integrated Sensing and Communications: Information theory, signal processing, and applications".

Further information is available on the workshop website.

2023 ComSoc CTTC Technical Achievement Award

The award committee has selected Professor Giuseppe Caire as the recipient of the 2023 IEEE Communications Society Communication Theory Technical Committee (CTTC) Technical Achievement Award.

Talk by Professor Meir Feder of Tel Aviv University

We are pleased to host a talk by Prof. Meir Feder of Tel Aviv University next Wednesday, July 12, 2023, in our seminar room HFT 617.

Time: Wed., July 12, 2023, 11:00 a.m.

Location: HFT building, Room HFT-TA 617, Einsteinufer 25, 10587 Berlin

Title: Multiple and Hierarchical Universality

Joint TUB and Huawei Workshop on Communication Technology

CommIT will host a Huawei-sponsored workshop on communication technology titled "How to achieve 1Tbps in Wireless?".

Further information is available on the workshop website.

Location

Contact

| Secretariat | Office code |
|---|---|
| Building | Main building |
| Room | H 0815 |
| Address | Technische Universität Berlin, Straße des 17. Juni 135, 10623 Berlin |
| Mon | 9:00-12:00 |
| Tue | 8:00-11:00 |

© CommIT

© Philipp Arnoldt
